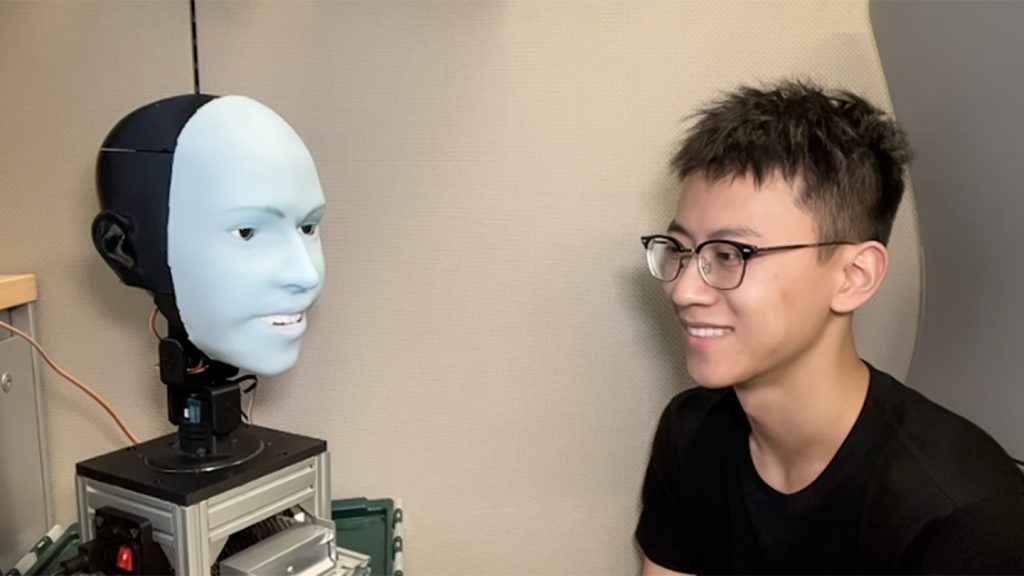

With its smooth silicone skin and blue face, Emo the robot looks more like a mechanical member of the Blue Man Group than a regular human, except when it smiles.

In a study published March 27 in Science Robotics, researchers describe how they taught Emo to smile in sync with humans. Emo can predict a human smile 839 milliseconds before it happens and smile back.

Right now, most humanoid robots respond to a smile with a noticeable delay, often because they are imitating a person’s face in real time. “I think a lot of people who are interacting with a social robot for the first time are disappointed by how limited it is,” says Chaona Chen, a human-robot interaction researcher at the University of Glasgow in Scotland. “Improving the real-time expression of robots is important.”

By synchronizing their facial expressions with people’s, future robots could become a source of connection amid the loneliness crisis, says Yuhang Hu, a roboticist at Columbia University who developed Emo with colleagues (SN: 11/7/23).

Cameras in the robot’s eyes let it detect subtleties in human expressions, which it then imitates using 26 actuators beneath its soft, blue face. To train Emo, the researchers first placed it in front of a camera for several hours. Much as humans learn by looking in a mirror, Emo watched itself in the camera while the researchers activated its actuators at random, learning how activating the actuators in its face relates to the expressions they create. “Then the robot knows, OK, if I want to make a smiley face, I should actuate these ‘muscles,’” Hu says.
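The mirror-style training described above can be illustrated with a toy sketch. It is not the researchers’ actual model: the simulated face, the four “landmarks” and the nearest-neighbor lookup are all assumptions made for illustration. Only the count of 26 actuators comes from the study as reported here.

```python
import random

NUM_ACTUATORS = 26  # from the article; everything else below is hypothetical
NUM_LANDMARKS = 4   # toy stand-in for facial features seen by the camera


def make_toy_face(rng):
    """Return a hidden actuator-to-landmark mapping the robot must discover."""
    weights = [[rng.uniform(-1, 1) for _ in range(NUM_ACTUATORS)]
               for _ in range(NUM_LANDMARKS)]

    def observe(command):
        # What the camera would "see" for a given actuator command.
        return [sum(w * c for w, c in zip(row, command)) for row in weights]

    return observe


def babble(observe, rng, samples=2000):
    """Fire actuators at random and record (command, observed face) pairs."""
    data = []
    for _ in range(samples):
        command = [rng.random() for _ in range(NUM_ACTUATORS)]
        data.append((command, observe(command)))
    return data


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def command_for(target_face, data):
    """Inverse lookup: the recorded command whose face was closest to the target."""
    return min(data, key=lambda pair: squared_distance(pair[1], target_face))[0]


rng = random.Random(0)
observe = make_toy_face(rng)
memory = babble(observe, rng)

# Pick a "smile" the toy face can make, then ask the self-model how to produce it.
smile_command = [rng.random() for _ in range(NUM_ACTUATORS)]
smile_face = observe(smile_command)
chosen = command_for(smile_face, memory)
error = squared_distance(observe(chosen), smile_face)
print(f"squared error of the closest remembered face: {error:.3f}")
```

The lookup only ever returns a command the robot has already tried, so the match gets better the longer it “babbles.” A real system would more likely fit a model that can generalize between samples.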

Next, the researchers showed Emo videos of humans making facial expressions. By analyzing nearly 800 videos, Emo learned which muscle movements signal that a particular expression is coming. In thousands of further tests involving hundreds of other videos, the robot correctly predicted what facial expression a human would make and re-created it in sync with the human more than 70 percent of the time. Besides smiling, Emo can produce expressions such as raising its eyebrows and frowning, Hu says.
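To give a feel for what predicting an expression from its first hints might involve, here is a minimal sketch on synthetic data. Every detail is an assumption for illustration: the “mouth-corner lift” signal, the five-frame window and the threshold rule are invented, and the score it prints says nothing about Emo’s real accuracy.

```python
import random

EARLY_FRAMES = 5   # how many opening frames the predictor may see (assumed)
CLIP_FRAMES = 30   # length of each synthetic clip (assumed)


def make_clip(rng, smiling):
    """Synthetic mouth-corner lift per frame: smiles ramp up, neutral faces jitter."""
    slope = rng.uniform(0.02, 0.05) if smiling else 0.0
    return [slope * i + rng.gauss(0, 0.01) for i in range(CLIP_FRAMES)]


def predict_smile(early, min_rise=0.04):
    """Predict a smile if the lift rises enough across the early frames."""
    return (early[-1] - early[0]) >= min_rise


rng = random.Random(0)
labels = [i % 2 == 0 for i in range(200)]            # alternate smile / neutral
clips = [make_clip(rng, smiling) for smiling in labels]

# Score the predictor using only the start of each clip, before the smile peaks.
hits = sum(predict_smile(clip[:EARLY_FRAMES]) == smiling
           for clip, smiling in zip(clips, labels))
accuracy = hits / len(clips)
print(f"predicted correctly on {accuracy:.0%} of synthetic clips")
```

The point of the sketch is the slicing: the predictor never sees the finished expression, only the frames leading up to it, which is what lets a response start early rather than lag behind.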

Emo’s well-timed smiles could ease some of the awkward, unsettling feelings that a robot’s delayed reactions can cause. Emo’s blue skin is also designed to help it avoid the uncanny valley effect (SN: 7/2/19). If people believe a robot is meant to look like a human, Hu explains, “then they will always find some difference or become skeptical.” With Emo’s flexible blue face, however, people can “think about it as a new species. It doesn’t have to be a real person.”

The robot does not yet have a voice, but integrating generative AI chatbot capabilities, like those of ChatGPT, could make its reactions even more appropriate. Emo would be able to anticipate facial responses from words as well as from human muscle movements, and it could respond verbally, too. First, though, Emo’s lips need work. Current robot mouth movement often relies heavily on the jaw, which Hu says people find strange and which makes them quickly lose interest.

Once the robot has more lifelike lips and chatbot features, it could become a more enjoyable companion. Having Emo for company during late nights in the robotics lab would be a welcome addition, Hu says. “Perhaps when I’m working late at night, we can vent to each other about the workload or exchange a few jokes,” he says.