Elon Musk’s legal action against OpenAI may not be successful in court, but it’s already revealing the truth about the leading AI research organization.

The open aspect of OpenAI, we discovered this week, was more of a recruitment strategy than a commitment to open source. Regardless of how advanced its technology becomes, OpenAI will always have a motive to present its research as almost reaching artificial general intelligence, but not quite there.

As the two parties battle it out, Musk may well succeed in exposing OpenAI’s true nature as a for-profit, Microsoft-owned entity. In the meantime, the public will gain valuable insights into OpenAI’s establishment and development.

Here’s what we’ve learned so far:

OpenAI’s transparency was mainly a recruitment strategy

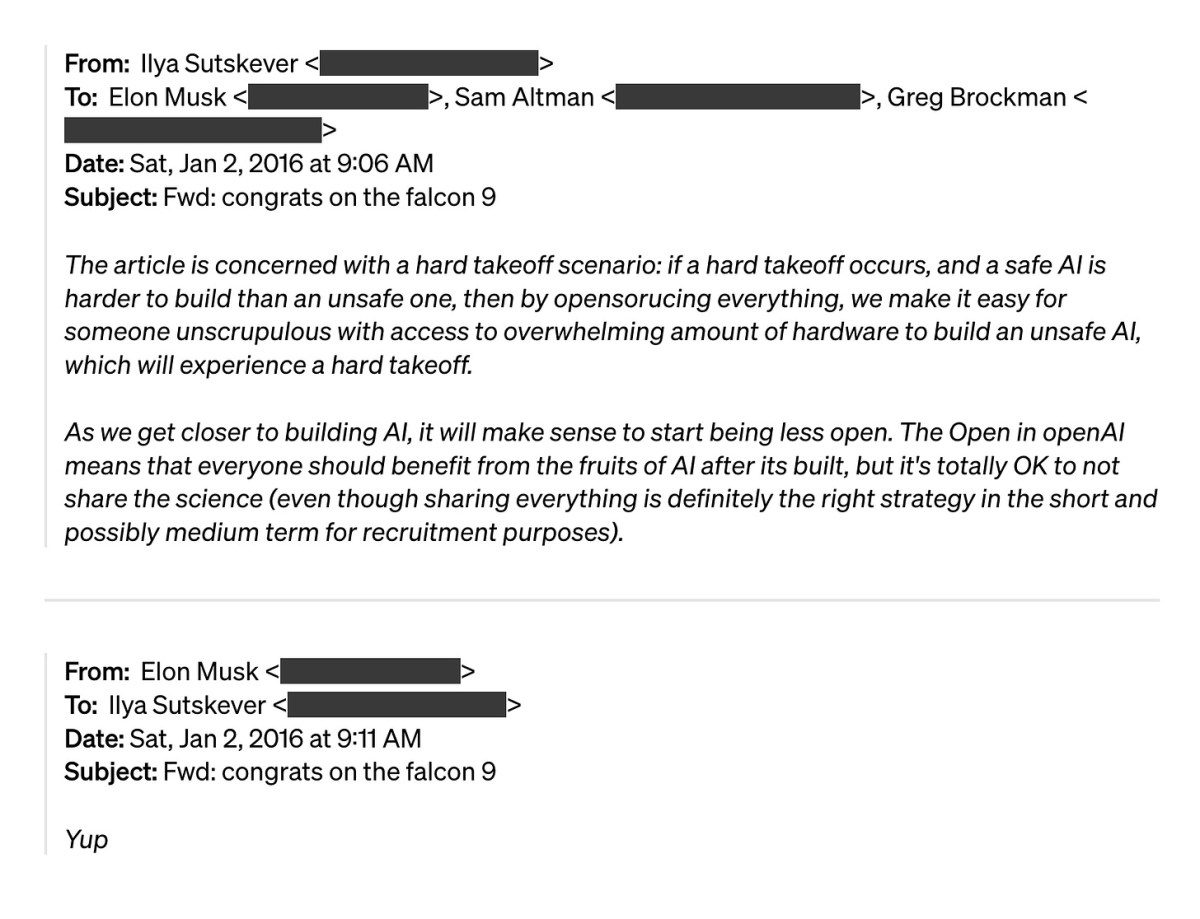

In an effort to counter Musk’s claim that OpenAI violated its founding agreement, OpenAI leadership released an exceptional internal email this week. On January 2, 2016, OpenAI chief scientist Ilya Sutskever stated that open-sourcing its research was not essential to its mission, and mainly a recruiting tactic.

“As we get closer to building AI, it will make sense to start being less open,” Sutskever wrote. “The Open in openAI means that everyone should benefit from the fruits of AI after its built, but it’s totally OK to not share the science (even though sharing everything is definitely the right strategy in the short and possibly medium term for recruitment purposes).”

Sutskever’s remarks contradict OpenAI’s early public portrayal as an open source organization (it is in the name, after all), and aren’t exactly aligned with the company’s founding document. “The resulting technology will benefit the public,” the document said, “and the corporation will seek to open source technology for the public benefit when applicable.”

OpenAI has since ceased open sourcing its largest GPT models. This may be because it believes it’s close to developing “AI” — or artificial general intelligence, according to Sutskever’s email — or because it’s using the pretext of being ‘close’ to keep its research confidential. And that's where things get complicated.

Microsoft complicates the founding agreement

A few years after the Sutskever email (which Musk agreed with, complicating his case), OpenAI secured a $10 billion investment deal with Microsoft that gives the tech giant a large share of OpenAI’s profits. However, the deal only applies to OpenAI’s technology before it achieves artificial general intelligence.

OpenAI has now essentially trapped itself in a position where it must be near enough to AGI that it can’t share the research, but far enough away to keep generating profits for Microsoft. Musk’s lawsuit alleges that OpenAI has reached AGI, a questionable claim, but it’s uncomfortable either way.

The case lays it out clearly. “Given Microsoft’s enormous financial interest in keeping the gate closed to the public, OpenAI, Inc.’s new captured, conflicted, and compliant Board will have every reason to delay ever making a finding that OpenAI has attained AGI,” the lawsuit says. “To the contrary, OpenAI’s attainment of AGI, like ‘Tomorrow’ in Annie, will always be a day away, ensuring that Microsoft will be licensed to OpenAI’s latest technology and the public will be shut out, precisely the opposite of the Founding Agreement.”

I emailed Musk to ask if pushing OpenAI to make admissions similar to those in Sutskever’s email, which would highlight the tension in the company’s position, would justify pursuing the case. He didn't respond by the deadline.

Musk against Google

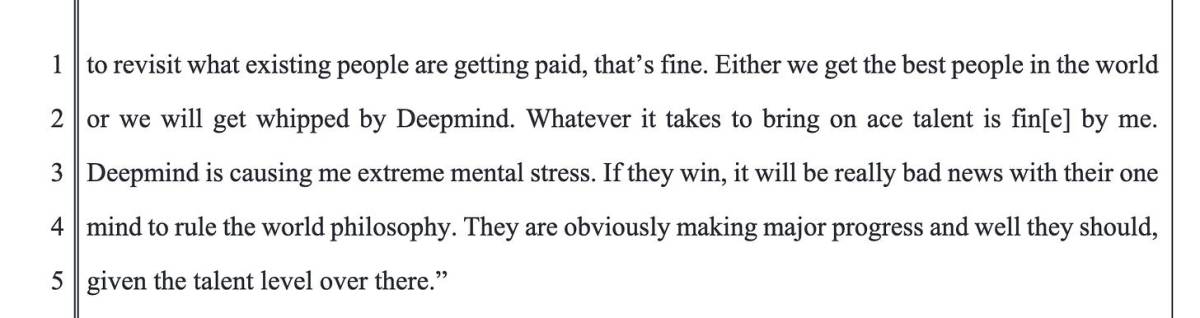

An interesting aspect of Musk’s case is his concern about Google’s AI intentions and his effort to counterbalance them with OpenAI. In an email to the OpenAI team in February 2016, Musk wrote, “Deepmind is causing me extreme mental stress. If they win, it will be really bad news with their one mind to rule the world philosophy.” The lawsuit also recounts an apocryphal story of one DeepMind investor joking that shooting its CEO, Demis Hassabis, would be the best possible thing they could do for humanity, given the dangers of AI.


Now, consider the situation: Musk has had a tumultuous split with OpenAI, Google is developing technology similar to OpenAI's (though not without challenges), and Musk is now developing his own AI, Grok, which has left some unimpressed. In this context, a lawsuit is one way to redirect the technology back to Musk's original intentions. And while it might not change reality, it could certainly reshape the story about his former partner.
