My legions sprang into action the moment the digital analog of a starting gun fired. Strangers around the globe frantically dragged and dropped scanned shreds of paper on a virtual canvas, racing to reconstruct a shredded document. It was reminiscent of the scene from the movie Argo, in which Iranian revolutionaries enlisted children to reassemble thousands of documents shredded by United States Embassy employees.

We completed three documents in five days, at a breakneck speed that put us third among 9,000 competitors. We had just two documents to go. The secret to our success was our size and our system. My collaborator, computer scientist Manuel Cebrian, and I had created a platform that allowed thousands of individuals to work together on these scrambled documents. Plus, we rewarded assistance by enticing key players with a share of the $50,000 bounty, should we win.

However, my optimism faded suddenly on Day 5. My phone rang, and Cebrian shouted, “We are under attack!” I swung open my laptop and logged into the system, only to see thousands of man-hours of meticulous work disappear in seconds, as virtual paper scraps scattered before me in all directions. It was the first in a series of attacks sustained by our team, and marked the end of our winning streak.

Our crowd was competing in the “Shredder Challenge,” a 2011 competition organized by Darpa, the U.S. defense research agency. To them, it was a game with repercussions. The agency wanted to identify and assess new capabilities that might help the U.S. operate in warzones, as well as expose points of national vulnerability—for instance, those in which sensitive information contained in shredded documents could be revealed. The challenge asked participants to reconstruct five shredded documents of progressive difficulty, totaling 10,000 chads of paper. Many computer scientists tackled the challenge with advanced image processing programs, in which algorithms automatically aligned pieces by matching the patterns on the shreds’ edges. But Cebrian and I turned to the masses.

For years, we had been fascinated with the potential of crowdsourcing, the act of outsourcing a job to an undefined, large group of people in the form of an open call. We wanted to know if crowdsourcing might be able to address some of the most complex problems of our day, or if it was just the latest fad. At the beginning of the Shredder Challenge, we relished its power. But when we got attacked, we realized that crowdsourcing’s power is also its curse.

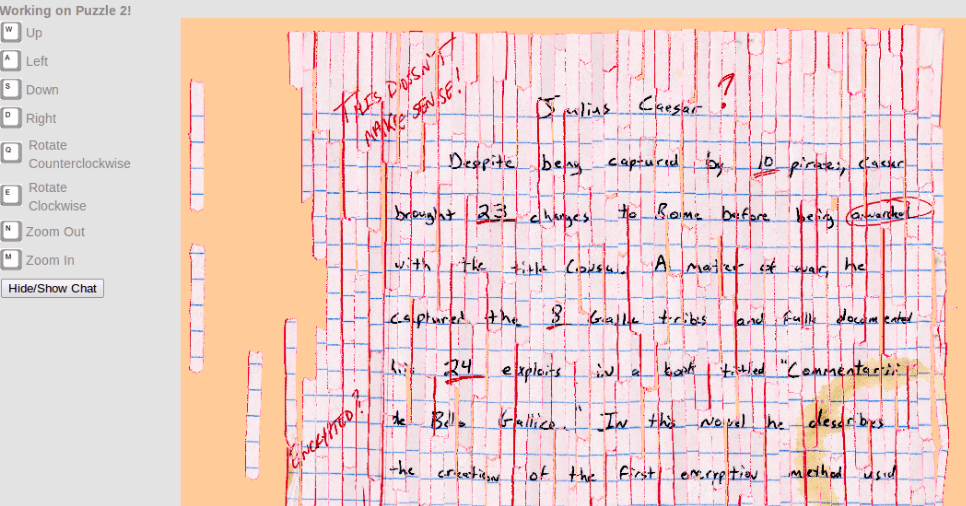

In this video (top), each circle represents a shred of paper in Puzzle #2. A screenshot of the actual, completed Puzzle #2 is below the video.

A Scavenger Hunt Bodes Well

In 2010, organizational theorists developed a statistical measure of group intelligence.1 They found that high collective IQ did not strongly correlate with the average or maximum individual intelligence of group members. Rather, it linked to the social sensitivity of teammates, the equality in conversational turn-taking, and the number of women in the group. They had analyzed teams of five or fewer, and their surprising results led me to consider how intelligence might scale as the connections between individuals multiplied and moved online.

Wikipedia demonstrates the potential of crowd intelligence online. The encyclopedia, which allows anyone with an Internet connection to edit it, is at least as accurate as professionally authored encyclopedias and has unmatched breadth. But if crowdsourcing is so powerful, why hasn’t it succeeded to the same extent in other realms?

This was the question on my mind when I arrived at the Massachusetts Institute of Technology (MIT) in 2010, and met Manuel Cebrian. He was a young, fast-talking Spaniard working as a postdoctoral researcher in the laboratory of Alex Pentland, a pioneer in mobile information systems. Our conversations were punctuated with Cebrian’s quirky, favorite exclamation, “BOOM!” The length of the “OOOO” signaled the impact he thought an idea or solution would have. In him, I immediately found a collaborator—and a friend—whose interests and enthusiasm matched my own. Cebrian had just completed another Darpa challenge in 2009, and together we dove into the post-play analysis.

The “Red Balloon Challenge” was one of Darpa’s first forays into social networking research. Participants were asked to find 10 red weather balloons tethered at random locations all over the continental U.S. The agency’s stated goal was “to explore the roles the Internet and social networking play in the timely communication, wide-area team-building, and urgent mobilization required to solve broad-scope, time-critical problems.” Cebrian took a novel approach to harnessing the masses. He devised an incentive scheme to reward participants who found balloons, as well as those who recruited valuable people through their network. Within nine hours, Cebrian and his minions had discovered all of the balloons, and won the challenge.

Even with advanced satellite imagery, smaller teams were unable to complete this task with the speed and accuracy Cebrian’s crowd displayed. After the match, Cebrian, Pentland, and I retraced the teams’ steps in order to learn how they had been so successful. In a report in Science, we revealed the key: Individuals didn’t simply blanket their acquaintances with requests; they also selectively recruited distant peers who would search places they could not themselves reach.2

With these lessons in hand, Cebrian and I entered the “Tag Challenge” in 2012. In this contest, five actors disguised as jewel thieves hid out in five European and North American cities. To us, the challenge provided a chance to see how crowdsourcing would work in an international and more realistic scenario.

We orchestrated the manhunt from laptops in my dining room in Dubai, where we tapped into social media with an approach similar to the one Cebrian had used before. We also made the challenge easy to access, with a tailored Android smartphone app that made it simple for people to receive messages, recruit friends, and upload pictures of sightings.3

The strategy worked: Victory was ours again. Cebrian and I dreamed of how this process might be used to find people who have gone missing in a natural disaster, culprits in a crime, or in other critical operations.

Then It Gets Real

As we rejoiced in our success, we were reminded of a harsh reality. When two young men set off bombs during the 2013 Boston Marathon, crowds on Reddit misidentified the bombers, leading to the harassment of innocent individuals. Here was an example of how crowdsourcing had the power to do harm. In the Shredder Challenge, we had seen the potential for abuse as well.

At first, the invasions into our team’s puzzles in the 2011 challenge seemed like minor blips: Someone moved shreds to a different section of the virtual board where we could not see them. When we figured it out, we reverted the changes. Then the attacker piled pieces on top of one another, obscuring what was below. We countered by blocking this possibility. Next, they pushed pieces to the edge of the board where they were less visible, and eventually we found a way to offset this action as well. We then wrote computer commands to detect rogue behavior, but all of the interruptions had slowed us down and affected the crowd’s morale. Ultimately we lost the race, taking sixth place.

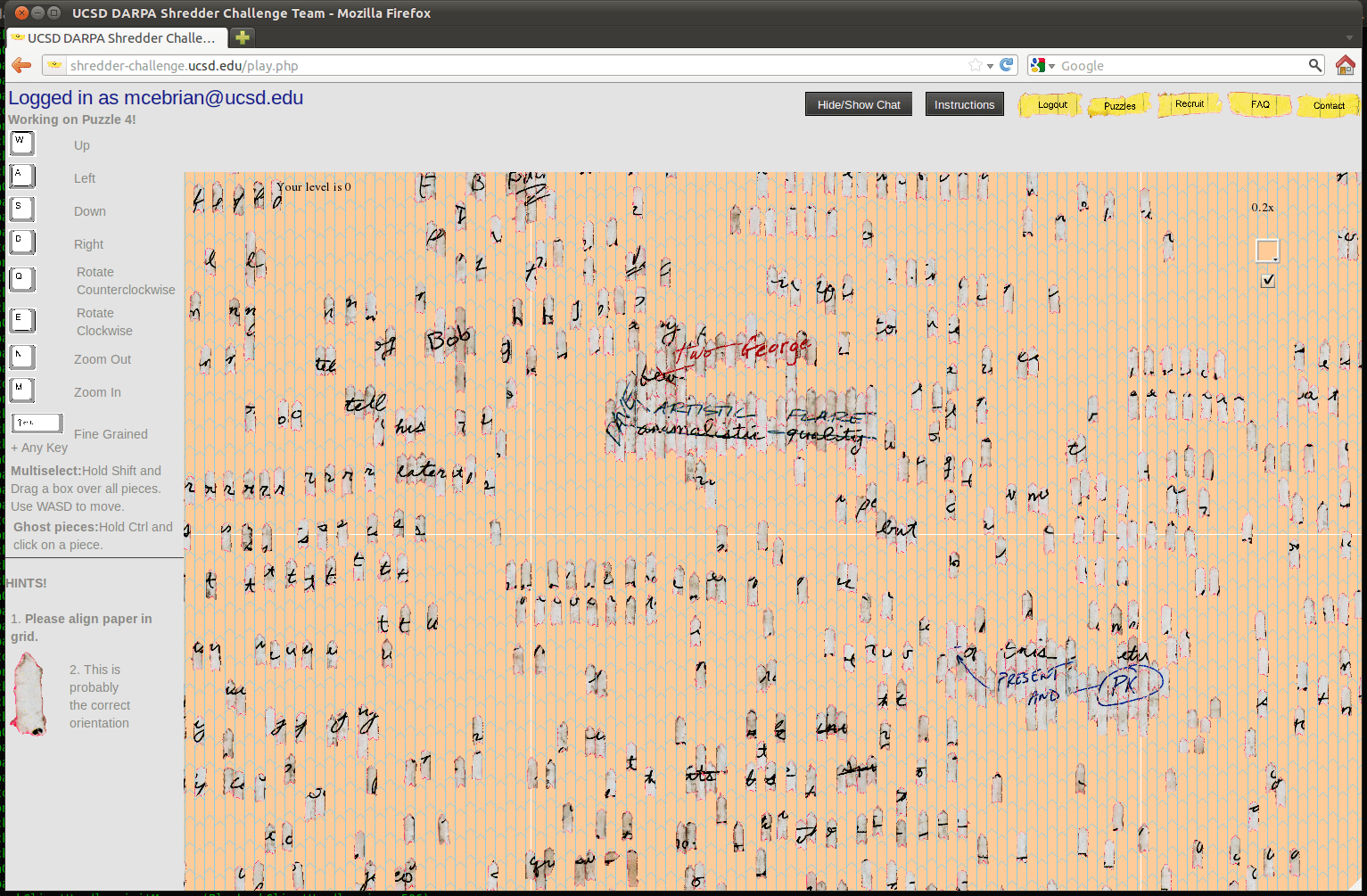

Iyad Rahwan’s team reconstructed their process of assembling shredded documents in time-lapse videos. In this video (top), each circle represents a shred of paper in Puzzle #4. The red circles represent shreds moved by an attacker. A screenshot of the actual, partially completed Puzzle #4 is below the video.

In our post-mortem analysis, Cebrian and I revealed how the crowd recovered efficiently from its own errors, fixing 86 percent of them in under 10 minutes.4 However, the crowd was hopeless against a determined attacker. Before the first attack, our progress on the fourth puzzle had combined 39,299 moves by 342 users over more than 38 hours. Destroying all this progress required just 416 moves by one attacker in about an hour. In other words, creation took 100 times as many moves and about 40 times longer than destruction.
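The asymmetry is easy to check from the figures above. Here is a minimal Python sketch that uses only the numbers quoted in this paragraph; the variable names are illustrative, and the attack is assumed to have lasted exactly one hour, which approximates "about an hour."

```python
# Figures quoted in the text for Puzzle #4, before the first attack.
creation_moves = 39_299  # moves made by the crowd
creation_hours = 38      # "more than 38 hours", so this is a lower bound
attack_moves = 416       # moves made by the single attacker
attack_hours = 1         # assumption: "about an hour" taken as exactly 1

# How much more effort building took than destroying.
move_ratio = creation_moves / attack_moves
time_ratio = creation_hours / attack_hours

print(f"moves: {move_ratio:.1f}x, time: {time_ratio:.0f}x")
# prints "moves: 94.5x, time: 38x"
```

The exact ratios, roughly 94 and 38, round to the 100 and 40 quoted above.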

First place was taken by a team of three using custom-designed software. They were far less vulnerable to invasion than we were with our oh-so-open platform. A few days after the contest had ended, our attacker emailed Cebrian from an anonymous address, admitting that he or she belonged to a competing group. They claimed to have recruited individuals through 4chan, an image-posting site whose users have been responsible for the formation of Internet memes like “rickrolling,” as well as the recent threat to release hundreds of nude photos without consent. Ironically, it is crowdsourcing that lends 4chan its power to do significant harm.

Online Attacker Reveals Hateful Motives

The 4chan crowd infiltrated our platform easily because we developed it with accessibility in mind. I still feel that open recruitment is the right engine to drive crowdsourced approaches, but now I see how it simultaneously acts as an Achilles’ heel, rendering projects vulnerable to attacks. Looking for the silver lining, Cebrian called the situation we witnessed “competitive crowdsourcing.” Rather than one individual trying to handicap their opponent in a match, one crowd applied its collective intellect to hampering another. But there were more surprises in the attacker’s email: They had an agenda.

Our attacker wrote:

“As for my motivation, I too am working on the puzzle and personally feel that crowdsourcing is basically cheating (and I’m not the only one that feels this way). Sure, if you get enough people together and working on it they will be able to solve nearly any puzzle. It’s only a matter of time, however for what should be a programming challenge about computer vision algorithms, crowdsourcing really just seems like a brute force and ugly plan of attack, even if it is effective.”

The attacker reminded me of the Luddites in the 19th century, who destroyed the cotton- and wool-processing technology that they feared would replace man with machine. Only in this case, the concern was reversed: The attacker seemed to dislike how crowdsourcing puts the collective potential of humans above technology.

In retrospect, it might have been foolish to assume that every member of an anonymous crowd would act according to our best interests. Designing crowdsourcing systems for the real world will take as much strategic thought as designing markets or political institutions, each of these requiring regulations and checks and balances to operate. While we take for granted the way in which social media scales up our ability to mobilize crowds in unprecedented ways, we must confront the challenge of ensuring those mobilized crowds do not fall prey to mobs.

A vision I recall from the Shredder Challenge was a phrase we had reassembled, which I watched disappear as the attacker scrambled our work: “artistic flare.” The words are now what I imagine when trying to express the struggle between the creative, collective mind that emerges online, and the dark, almost eerie forces that antagonize all kinds of genius.

Iyad Rahwan is an associate professor at the Masdar Institute of Science and Technology in the United Arab Emirates, an institute established in cooperation with MIT.

References

1. Woolley, A.W., Chabris, C.F., Pentland, A., Hashmi, N., & Malone, T.W. Evidence for a collective intelligence factor in the performance of human groups. Science 330, 686-688 (2010).

2. Pickard, G., et al. Time-critical social mobilization. Science 334, 509-512 (2011).

3. Rutherford, A., et al. Targeted social mobilization in a global manhunt. PLoS ONE 8, e74628 (2013).

4. Stefanovitch, N., Alshamsi, A., Cebrian, M., & Rahwan, I. Error and attack tolerance of collective problem solving: The DARPA Shredder Challenge. EPJ Data Science 3, 1-27 (2014).